LiteSwitch SSP-Hosted Setup Guide¶

This document gives an overview of the LiteSwitch SSP-hosted installation in partner infrastructure and describes it in detail.

Glossary¶

Docker: an open platform for developing, shipping, and running applications. Docker can package and run an application in a loosely isolated environment called a container.

Docker Image: a read-only template with instructions for creating a Docker container. An image can be based on another image, with some additional customization.

Docker Container: a runnable instance of an image. You can connect a container to one or more networks, attach storage to it, or even create a new image based on its current state. By default, a container is isolated from other containers and its host machine. You can control how isolated a container’s network, storage, or other underlying subsystems are from other containers or from the host machine.

Docker Daemon: a persistent background process that manages Docker images, containers, networks, and storage volumes.

Load Balancer: a device or service that routes client requests across all servers capable of processing those requests.

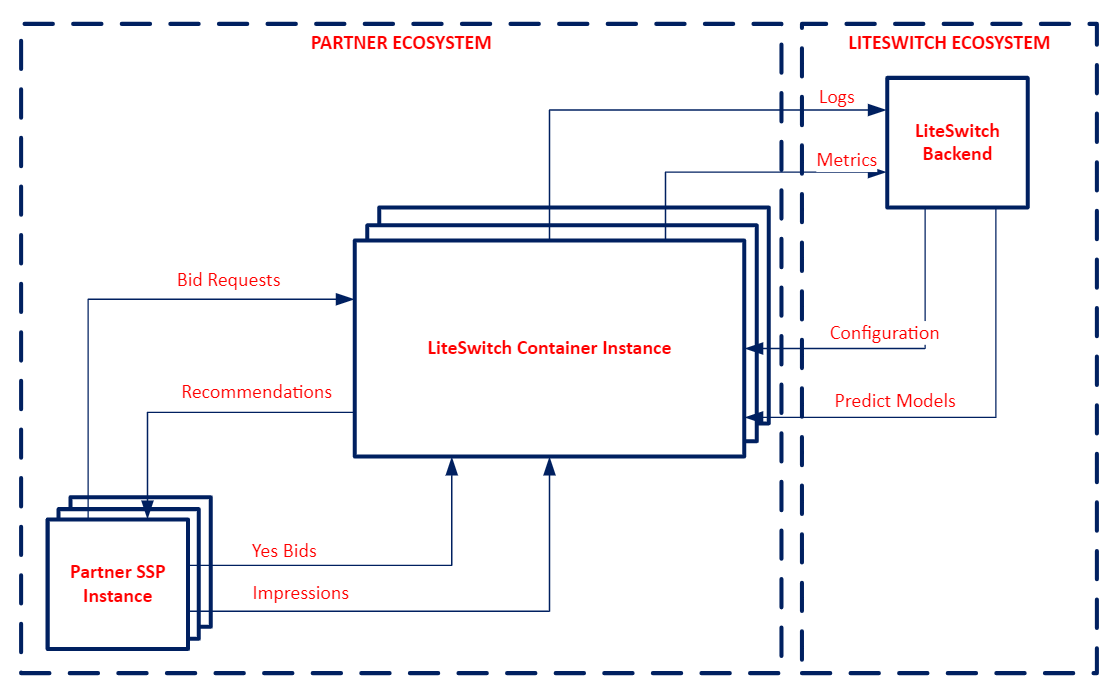

Partner Ecosystem Overview¶

Shipment¶

The LiteSwitch team will notify you whenever the LiteSwitch Container in the Artifact Registry is upgraded to a new version.

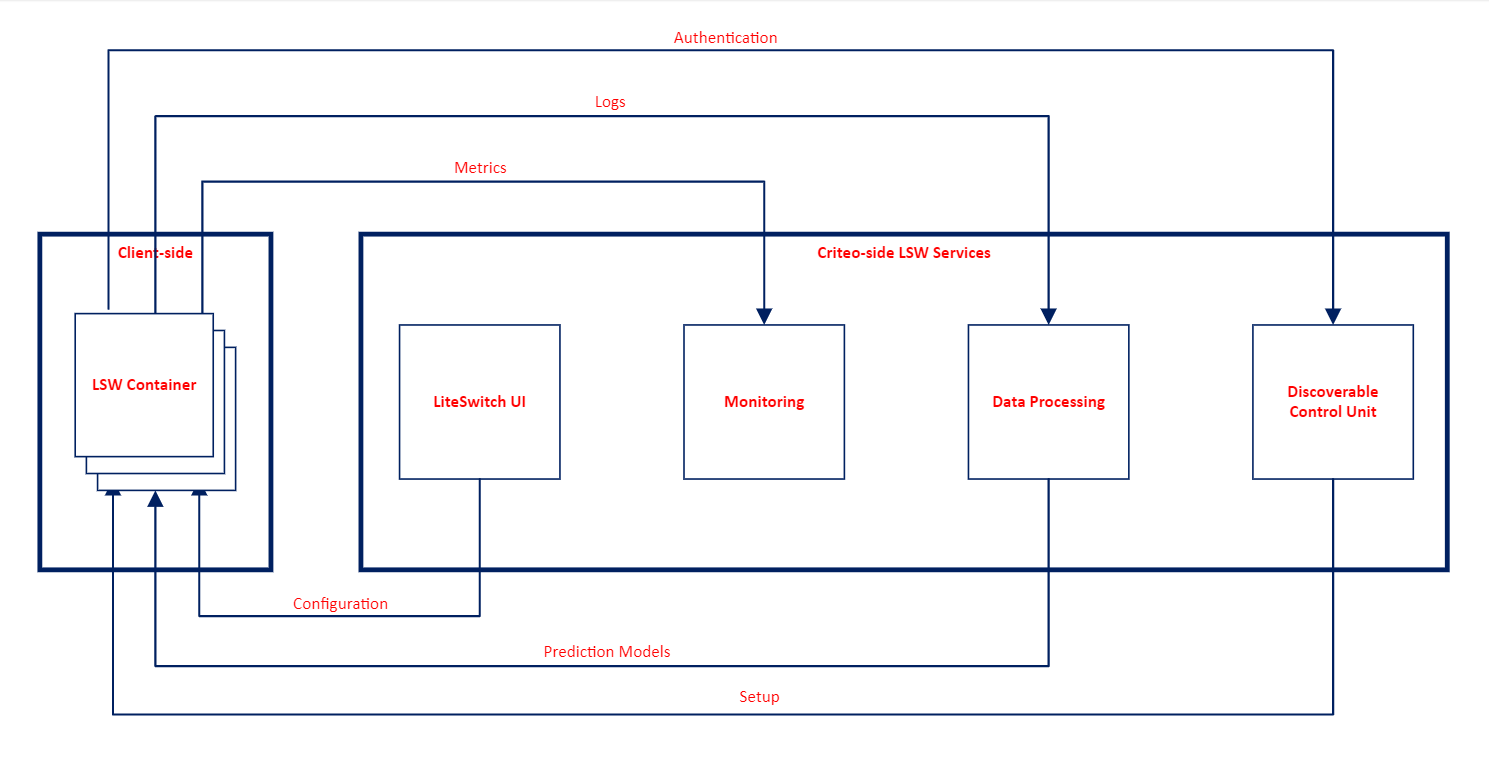

Master Agent is the main inner process. It authenticates the instance on the LiteSwitch backend, sets up and launches the server, and establishes the rotation of telemetry to the backend.

LiteSwitch Server is the server receiving recommendation requests from the partner Supplier.

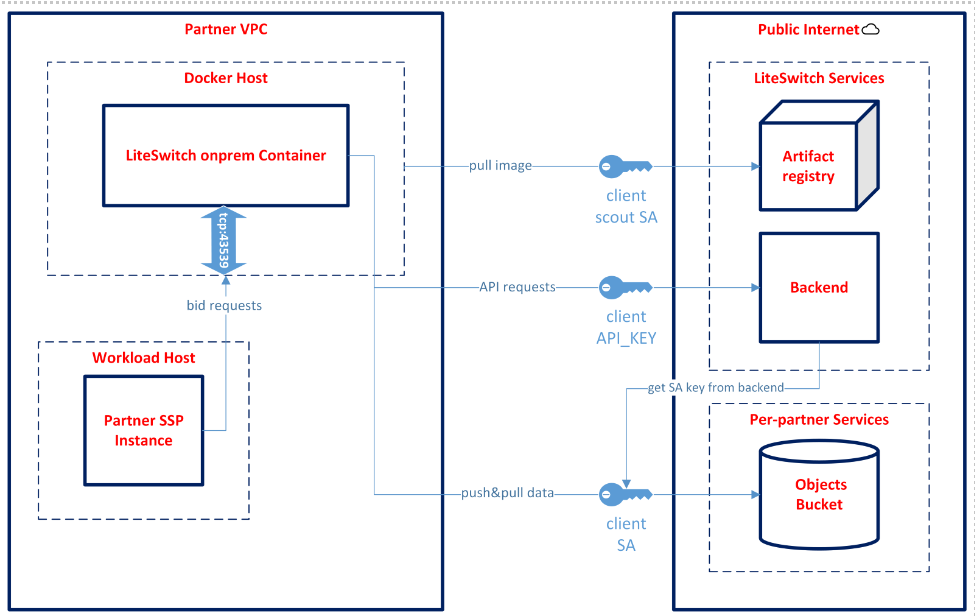

The Docker image is published to the Google Artifact Registry owned by Criteo:

us-east1-docker.pkg.dev/iow-liteswitch/uverse-docker-local/tenant/liteswitch/liteswitch-onprem/releases:x.y.z

The LiteSwitch team provides you with a dedicated Google scout service account with access to the Artifact Registry.

Scaling¶

Several containers are expected to be set up in a pool of server instances, with requests routed by load balancers, e.g. a DNS balancer. Scaling is horizontal: the more traffic needs to be processed, the more servers are added to the pool.

The minimum system requirements for each container are as follows:

Min 8-core CPU

Min 8 GB RAM

The required number of instances is defined by the following factors:

The instance type (CPU & memory)

The number of ad slots and wseats (buyer seats allowed to bid) in requests

The following Google instance types provide the optimal QPS per core:

N2

N2D
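As a rough sizing aid, the factors above can be turned into a back-of-the-envelope estimate. The sketch below assumes you have measured a sustainable QPS per core for your own instance type and request mix. The function name and every number in the example line are hypothetical and are not LiteSwitch figures.

```shell
# Estimate how many instances a pool needs (rounded up).
# All arguments are assumptions that you measure or choose yourself:
#   $1 = peak QPS to serve
#   $2 = sustainable QPS per core for your instance type and request mix
#   $3 = cores per instance (this guide requires at least 8)
instances_needed() {
  awk -v qps="$1" -v per_core="$2" -v cores="$3" 'BEGIN {
    capacity = per_core * cores            # QPS one instance can serve
    if (capacity <= 0) { exit 1 }
    n = int(qps / capacity)
    if (n * capacity < qps) { n++ }        # round up to cover the remainder
    print n
  }'
}

# Hypothetical example: 50000 QPS peak, 500 QPS per core, 8 cores per instance.
# instances_needed 50000 500 8
```

Treat the result as a starting point only; the number of ad slots and wseats per request changes the achievable QPS per core, so re-measure after any change in traffic shape.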

Upgrading¶

The LiteSwitch team will notify you whenever the LiteSwitch Container in the Artifact Registry is upgraded to a new version.

Additionally, you can use a shortened tag, such as x.y (for example: liteswitch-onprem/releases:0.1), which always resolves to the newest z (patch) version. This approach gives you continuous access to non-breaking bug fixes and security updates. To get the new image, use the docker run --pull option, or perform a docker pull before starting the container again.
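A minimal sketch of the shortened-tag approach, assuming the image path given in this guide. The variable name and the helper `minor_tag` are hypothetical and only serve the illustration.

```shell
# Repository path as given in this guide.
LSW_IMAGE_REPO="us-east1-docker.pkg.dev/iow-liteswitch/uverse-docker-local/tenant/liteswitch/liteswitch-onprem/releases"

# Reduce a full x.y.z version to its x.y form, e.g. 0.1.7 -> 0.1.
minor_tag() {
  echo "$1" | cut -d. -f1,2
}

# Example (not executed here): re-pull the newest patch release of the 0.1
# line every time the container is created.
# docker run --pull=always --env-file agent.env --name liteswitch-services -d \
#   -p 43539:43539 "$LSW_IMAGE_REPO:$(minor_tag 0.1.7)"
```

With `--pull=always`, Docker checks the registry on every `docker run`, so a container that is recreated picks up the latest patch image without a separate pull step.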

LiteSwitch instances in your ecosystem are expected to be upgraded by you. Upgrades include setting up a new pool of containers and directing traffic to this new pool.

Container Setup¶

To set up a Docker container, proceed as follows:

Launching the Docker image requires an x86 machine with a working setup of the Docker daemon. The machine must be connected to the public internet, allowing Docker to connect to port 43539 on the host network interface.

Perform a Docker login for access to the Google Artifact Registry. This can be done using the Scout GCP service account created individually for each Partner along with a private SA key. The LiteSwitch team will send you the JSON private key by email; save it on the machine in a secure path.

Once the Docker registry login is set up and the requirements for the Docker daemon and its machine are met, the LiteSwitch team provides the Partner with the required ENVs for the Docker run command (find the detailed list in Appendix 1: Credentials and Appendix 2: Container Startup). Also, map the container port to the host machine to enable ingress traffic.
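The host prerequisites from the steps above can be verified with a short preflight check before pulling the image. This is only a sketch: the function name is hypothetical, and it assumes that "x86" means a 64-bit machine for which `uname -m` reports `x86_64`.

```shell
# Check the host prerequisites before pulling the image.
preflight() {
  arch=$(uname -m)
  # Assumption: the required x86 machine reports x86_64.
  if [ "$arch" != "x86_64" ]; then
    echo "unsupported architecture: $arch (x86 required)" >&2
    return 1
  fi
  # 'docker info' fails when the daemon is not running or not reachable.
  if ! docker info >/dev/null 2>&1; then
    echo "Docker daemon is not running or not accessible" >&2
    return 1
  fi
  echo "preflight OK"
}

# Example:
# preflight && echo "host is ready for the LiteSwitch container"
```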

Monitoring¶

The state and health of a container can be checked using the /status handler. If the server is up and running, the handler responds with the standard OK. Use this handler at server launch to make sure the server is ready to process requests; ongoing health checks are also performed using this handler.
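A readiness check of this kind can be scripted as a simple polling loop. The sketch below assumes the handler returns the plain body OK, as described above; the function name, the default attempt count, and the delay are hypothetical choices.

```shell
# Poll a /status URL until it answers "OK" or the attempts run out.
#   $1 = URL, $2 = max attempts (default 30), $3 = delay in seconds (default 2)
wait_for_ok() {
  url=$1
  attempts=${2:-30}
  delay=${3:-2}
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # -f: treat HTTP errors as failures, -s: silent, --max-time: bound each try
    body=$(curl -fs --max-time 5 "$url" 2>/dev/null)
    if [ "$body" = "OK" ]; then
      echo "ready"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "not ready after $attempts attempts" >&2
  return 1
}

# Example:
# wait_for_ok http://localhost:43539/status
```

The same function can back a load balancer or orchestrator health probe, since it exits with a non-zero status when the server never becomes ready.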

To protect the server from resource exhaustion, fail-safe logic is used. With this logic, the server drops requests instead of processing them when it is running out of resources. The logic is enabled by default with common parameters, but the outcome differs between instance types and should be fine-tuned.

The volume of dropped requests is a performance metric that is not expected to exceed the agreed percentage of all requests. The default volume is 5%. When the fail-safe logic drops a request, the server responds as follows:

HTTP response code: 503

Response body: ServiceUnavailable: too many requests
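If you count 503 responses on your side, the drop rate can be compared against the agreed limit with a small helper. This is a sketch under assumptions: the counters come from your own monitoring, both function names are hypothetical, and the 5% default mirrors the default volume stated above.

```shell
# Percentage of dropped requests, given counters collected on your side.
#   $1 = requests answered with 503, $2 = total requests
drop_rate_percent() {
  awk -v dropped="$1" -v total="$2" 'BEGIN {
    if (total <= 0) { print "0.00"; exit }
    printf "%.2f\n", 100 * dropped / total
  }'
}

# Succeeds (exit status 0) while the drop rate stays within the limit.
#   $3 = limit in percent (defaults to 5)
within_drop_limit() {
  rate=$(drop_rate_percent "$1" "$2")
  awk -v rate="$rate" -v limit="${3:-5}" 'BEGIN { exit !(rate + 0 <= limit + 0) }'
}

# Example with hypothetical counters:
# within_drop_limit 30 1000 || echo "drop rate above limit, consider scaling out"
```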

To speed up this process, the LiteSwitch team can, upon your request, provide metrics, including the volume of dropped requests, directly from the LiteSwitch Server. You are expected to set up monitoring and autoscaling on your side, to monitor resources, and to report any unexpected behavior to the LiteSwitch team.

Interface And Data Flow¶

Integration Setup¶

To integrate with LiteSwitch SSP-hosted, proceed as follows:

Use the LiteSwitch UI or API to configure your partner Suppliers and Buyers. Direct access to the UI/API is provided only after the initial integration, so the LiteSwitch team will assist with the configuration during the setup. Refer to LiteSwitch API for details.

Set up sending requests and receiving recommendations. Refer to Integration Overview for details.

Launch the SSP-hosted solution in your infrastructure.

Data Rotation¶

Appendix 1: Credentials¶

The LiteSwitch team provides you with the following credentials:

Google service account with access to LiteSwitch containers in Google Artifact Registry

API key that the LiteSwitch Container uses to register with the LiteSwitch Backend and complete its setup

You are also provided with the following data:

LiteSwitch Backend URL (LSW_ONPREM_BACKEND_HOST)

Appendix 2: Container Startup¶

GCP Artifact Registry Login with Docker¶

Please proceed as follows:

Log in to GCP with your scout service account key using the following commands:

mkdir -p "$HOME/.config/gcloud/"

vi $HOME/.config/gcloud/liteswitch_onprem_scout.json

# paste the content of the scout service account key to the file

gcloud auth activate-service-account --key-file=$HOME/.config/gcloud/liteswitch_onprem_scout.json

gcloud auth configure-docker us-east1-docker.pkg.dev

Pull the Docker image with the following command:

docker pull us-east1-docker.pkg.dev/iow-liteswitch/uverse-docker-local/tenant/liteswitch/liteswitch-onprem/releases:x.y.z

For more information, please read the GCP Artifact Registry documentation.
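If the gcloud CLI is not available on the machine, Artifact Registry also accepts a direct Docker login with a service account key file, using the fixed username `_json_key`. The following is a sketch that assumes the key path used above; the function name is hypothetical.

```shell
# Log Docker in to the registry host with a service account JSON key.
#   $1 = path to the key file
registry_login() {
  key_file=$1
  if [ ! -r "$key_file" ]; then
    echo "key file not readable: $key_file" >&2
    return 1
  fi
  # The key is passed on stdin so that it does not appear in the process list.
  docker login -u _json_key --password-stdin https://us-east1-docker.pkg.dev < "$key_file"
}

# Example:
# registry_login "$HOME/.config/gcloud/liteswitch_onprem_scout.json"
```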

Running the Docker Image¶

Create the agent.env file containing the required parameters for LiteSwitch agent to start:

vi agent.env

# file containing the necessary parameters for LSW agent to start:

LSW_ONPREM_SSP_ID=<PARTNER>

LSW_ONPREM_API_KEY=<KEY>

LSW_ONPREM_LOCATION=<LOCATION>

LSW_ONPREM_BACKEND_HOST=<HOST>

LSW_ONPREM_AUTH_PASSWORD=<PASSWORD>
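Before starting the container, it can help to confirm that the env file defines every parameter listed above. The helper below is a sketch with a hypothetical name; it only checks that each variable has a non-empty value, so unreplaced placeholders such as <KEY> still pass.

```shell
# Verify that an env file defines every variable listed above with a
# non-empty value. Prints the missing names and fails if any are absent.
check_agent_env() {
  env_file=$1
  missing=0
  for name in LSW_ONPREM_SSP_ID LSW_ONPREM_API_KEY LSW_ONPREM_LOCATION \
              LSW_ONPREM_BACKEND_HOST LSW_ONPREM_AUTH_PASSWORD; do
    # Match "NAME=" followed by at least one character.
    if ! grep -q "^${name}=." "$env_file"; then
      echo "missing or empty: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example:
# chmod 600 agent.env        # the file holds secrets, so restrict access
# check_agent_env agent.env && echo "agent.env looks complete"
```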

Now you are ready to run the LiteSwitch container:

docker run --env-file agent.env --name liteswitch-services -d -p 43539:43539 us-east1-docker.pkg.dev/iow-liteswitch/uverse-docker-local/tenant/liteswitch/liteswitch-onprem/releases:x.y.z

To check the logs, use the following command:

docker logs liteswitch-services -f

Wait for the "Started onprem agent service server on port 42285" message to appear.

Note

You can also forward port 42285 for the Docker container and then repeat curl http://localhost:42285/status until it responds with OK.

The server is ready to start processing requests upon receiving the OK response.

curl localhost:43539/status

OK